AWS MLOps Framework Pre-Packages ML Model Deployment Pipelines

- October 6, 2021

Introducing the AWS MLOps Framework

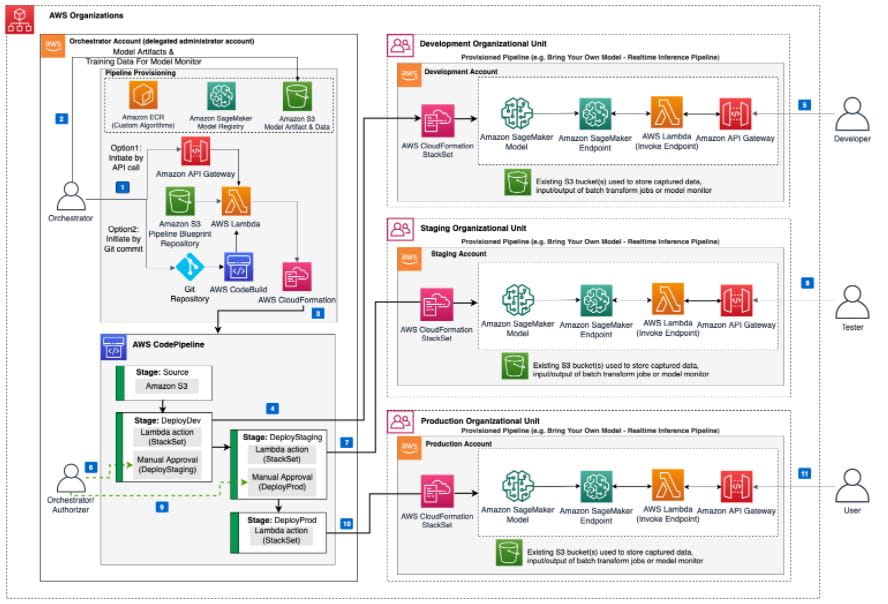

AWS has released a pre-packaged solution, the AWS MLOps Framework, that helps you streamline and enforce architecture best practices for production-ready ML models. It can be deployed with an AWS CloudFormation template. The solution lets users upload their trained models (an approach referred to as BYOM, or Bring Your Own Model), configure the orchestration of the pipeline, and monitor the pipeline's operations. Although deploying the solution is straightforward, you should have a basic understanding of ML concepts and of AWS services such as Amazon API Gateway, AWS CloudFormation, Amazon Simple Storage Service (Amazon S3), AWS CodePipeline, and Amazon SageMaker.

How does AWS MLOps Framework add value?

The AWS MLOps Framework can increase a team's agility and efficiency by enabling it to repeat successful processes at scale. Depending on your business context, you can choose from several ML pipeline types. If your team has the necessary DevOps expertise, you can also adjust the solution to fit your requirements.

To cover most business needs, the solution provides two AWS CloudFormation templates: one for single-account deployments and one for multi-account deployments. It also offers the option of using Amazon SageMaker Model Registry to deploy versioned models. Amazon SageMaker Model Registry lets you catalog models for production, manage model versions, associate metadata with models, manage a model's approval status, deploy models to production, and automate model deployment with continuous integration and continuous delivery (CI/CD). You can create the pipeline for your own custom algorithm or for a model registered in Amazon SageMaker Model Registry.

Image courtesy of AWS.

Using the AWS MLOps Framework

The AWS MLOps Framework has two components. The first is the orchestrator component, created by deploying the solution's AWS CloudFormation template. The second is an AWS CodePipeline component, created either by calling the API endpoint that the orchestrator component exposes or by committing a configuration file to an AWS CodeCommit repository. Once the orchestrator component is deployed, you can create the pipeline that deploys your ML model on top of it: either call the orchestrator component's API endpoint with a predefined request body, or commit an mlops-config.json file to the Git repository. For details on the available pipeline types and other aspects of the solution, see the AWS documentation at https://docs.aws.amazon.com/solutions/latest/aws-mlops-framework/api-methods.html.
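To make the two provisioning routes more concrete, here is a minimal Python sketch that assembles a request body for a real-time BYOM pipeline, serializes it as the contents of an mlops-config.json file, and builds (without sending) a POST request to the orchestrator API. The field names (`pipeline_type`, `model_name`, `model_framework`, `model_artifact_location`, `model_package_name`, and so on), the `byom_realtime_builtin` pipeline type, the `/provisionpipeline` path, and the URL are assumptions modeled on the AWS documentation linked above; verify them against the API methods page for your version of the solution. If the deployed API uses IAM authorization, the request must also be SigV4-signed before it is sent, which this sketch does not do.

```python
import json
import urllib.request
from typing import Optional

# HYPOTHETICAL placeholder: take the real URL from the orchestrator stack's
# outputs. The "/provisionpipeline" path is an assumption to verify in the docs.
ORCHESTRATOR_URL = "https://example.execute-api.us-east-1.amazonaws.com/prod/provisionpipeline"


def build_realtime_pipeline_body(
    model_name: str,
    inference_instance: str = "ml.m5.large",
    data_capture_location: str = "",
    model_framework: Optional[str] = None,
    model_framework_version: Optional[str] = None,
    model_artifact_location: Optional[str] = None,
    model_package_name: Optional[str] = None,
) -> dict:
    """Assemble a real-time BYOM pipeline request (field names are assumptions)."""
    body = {
        "pipeline_type": "byom_realtime_builtin",  # assumed pipeline type name
        "model_name": model_name,
        "inference_instance": inference_instance,
        "data_capture_location": data_capture_location,
    }
    if model_package_name:
        # Deploy a versioned model registered in SageMaker Model Registry.
        body["model_package_name"] = model_package_name
    elif model_framework and model_framework_version and model_artifact_location:
        # Deploy a trained model artifact (e.g. model.tar.gz) stored in Amazon S3.
        body["model_framework"] = model_framework
        body["model_framework_version"] = model_framework_version
        body["model_artifact_location"] = model_artifact_location
    else:
        raise ValueError(
            "Provide model_package_name, or framework, version and artifact location"
        )
    return body


def to_config_json(body: dict) -> str:
    """Serialize the body as the contents of an mlops-config.json file."""
    return json.dumps(body, indent=2)


def build_provision_request(url: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) an unsigned POST request to the orchestrator API."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model name and S3 locations, for illustration only.
    example = build_realtime_pipeline_body(
        model_name="my-xgboost-model",
        model_framework="xgboost",
        model_framework_version="1",
        model_artifact_location="my-bucket/models/model.tar.gz",
        data_capture_location="my-bucket/datacapture",
    )
    print(to_config_json(example))
```

The same body serves both routes: it can be committed as mlops-config.json or sent as the API request body. Keeping request construction separate from sending makes the payload easy to review and test before anything is provisioned.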

Once the pipeline is deployed, the solution hosts your model on Amazon SageMaker and creates a SageMaker endpoint for it. In addition to the SageMaker endpoint, you get an API endpoint that is linked to your deployed model. By sending new data to this API endpoint, you receive the model's output in JSON format.
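As an illustration of feeding new data to the model, the following Python sketch encodes feature rows as CSV, wraps them in a JSON request body, and decodes the JSON response. The `{"payload": ..., "content_type": "text/csv"}` body shape and the URL are assumptions (check the solution's documentation for the exact format your pipeline expects), and the shape of the returned JSON depends on your model. As with the orchestrator API, an IAM-protected endpoint would additionally require SigV4 signing, which is not shown here.

```python
import json
import urllib.request
from typing import Sequence

# HYPOTHETICAL placeholder: use the inference API URL reported by the deployed
# pipeline; this URL is not real.
INFERENCE_URL = "https://example.execute-api.us-east-1.amazonaws.com/prod/inference"


def build_inference_request(
    url: str, rows: Sequence[Sequence[float]]
) -> urllib.request.Request:
    """Wrap CSV-encoded rows in an assumed {"payload", "content_type"} body."""
    # One CSV line per row, values separated by commas.
    csv_payload = "\n".join(",".join(str(v) for v in row) for row in rows)
    body = {"payload": csv_payload, "content_type": "text/csv"}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_inference_response(raw: bytes):
    """Decode the JSON returned by the API; its exact shape depends on the model."""
    return json.loads(raw.decode("utf-8"))


def score(url: str, rows: Sequence[Sequence[float]], timeout: float = 10.0):
    """Send rows to the inference API and return the decoded JSON (network call)."""
    request = build_inference_request(url, rows)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_inference_response(response.read())
```

Only `score` touches the network; the request-building and response-parsing helpers can be exercised offline.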

Our take

The world of ML is constantly evolving, and so are AWS services; the MLOps Framework illustrates how quickly the company reacts to support changing customer needs. Many ML teams focus on building accurate models and prefer not to be weighed down by architectural details, so we like that AWS has introduced a framework that deploys, tests, and monitors ML models for them. Using the framework, a team can, for example, create an inference endpoint that returns the scoring output of a trained model, with drift detection, all packaged as a microservice.

Moreover, by provisioning pre-configured ML pipelines for development, staging, and production, the framework helps teams stay focused on their end goal rather than on the minutiae of building and managing pipelines. Today, teams bring their own trained models to the solution, but AWS notes that it plans to offer a pipeline for training your own model within the solution in the future. We look forward to that.

Learn more about MLOps:

- What is MLOps?

- Putting MLOps into Action ML Lifecycles and Models

- Streamlit Dashboards Give Data Scientists More Time for Analysis

Keep up to date on the latest technologies, best practices and use cases in the cloud by subscribing to the NTT DATA Tech Blog.