Scale Code Quality with Keptn’s SLO-driven Gates

February 15, 2022

Volusion, a leader in providing e-commerce solutions to small businesses, began modernizing its e-commerce platform to decrease time to market and improve scalability and extensibility. This journey involved moving the platform from a monolithic to a microservices architecture. Early on, Volusion struggled to build a reliable deployment process. Instead of lengthy, manual, and error-prone steps, the company wanted efficient automation that would fit the new microservices design.

The company wanted to make the deployment process for its e-commerce platform reliable, fast, and data-driven. Keptn was chosen as the control plane for DevOps automation of its cloud-native applications. Keptn uses a simple, declarative approach that lets users specify DevOps automation flows, such as delivery or operations automation, without scripting every detail. The same definition can be shared across any number of microservices without building individual pipelines and scripts.

Keptn is also popular for its ability to automatically set up a multi-stage environment with built-in quality gates and orchestrate a continuous delivery workflow across it, and it integrates with tools for performance testing, chaos testing, and more. Simply put, Keptn allows you to deploy reliably and confidently with SLO-based quality evaluations. In our work with Volusion, Keptn allowed us to quickly build an advanced DevOps platform that would have taken five times longer to build by assembling various tools ourselves. As a result, we could deliver more value to our client, faster.

Multi-Cluster Setup

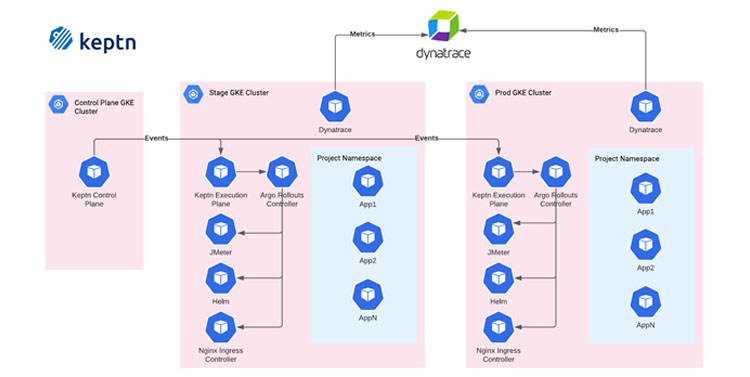

A multi-cluster setup isolates environments from each other, so a failure in one environment does not impact the others. We started by creating a Kubernetes cluster for every environment in Google Cloud using Google Kubernetes Engine (GKE). We then built a Terraform Infrastructure as Code (IaC) pipeline to provision the clusters automatically.

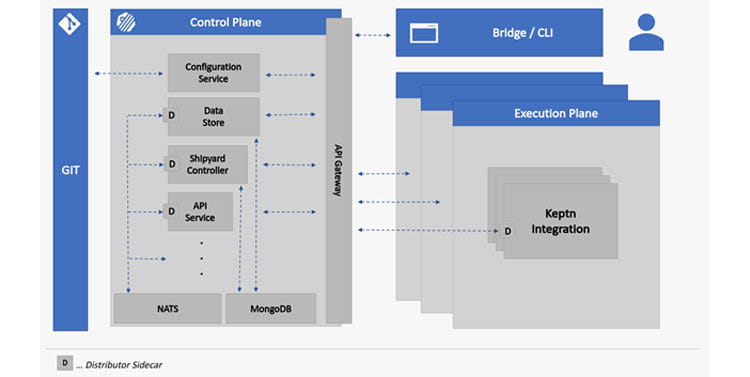

Keptn supports a multi-cluster setup by separating the Keptn control plane from the Keptn execution plane:

- Keptn Control Plane

  - The control plane is the minimum set of components required to run Keptn: it manages projects, stages, and services, handles events, and provides integration points.

  - The control plane orchestrates the task sequences defined in the shipyard file but does not execute the tasks itself.

- Keptn Execution Plane

  - The execution plane consists of all Keptn services required to process tasks (such as deployment and testing).

  - The execution plane is the cluster where you deploy your application and execute tasks from a task sequence.
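To make the split concrete, here is a minimal in-process sketch in Python. It is not Keptn code: the class names, the synchronous calls, and the `storefront` service are hypothetical, and the event types only loosely follow Keptn's `sh.keptn.event.<task>.triggered` / `.finished` naming. What it illustrates is the division of labor: the control plane only emits and records events, while handlers registered on the execution plane do the actual work.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Event:
    # Hypothetical event shape; types loosely follow "sh.keptn.event.<task>.triggered".
    type: str
    data: dict


Handler = Callable[[Event], dict]


@dataclass
class ExecutionPlane:
    """Stands in for the cluster where integration services execute tasks."""
    handlers: Dict[str, Handler] = field(default_factory=dict)

    def subscribe(self, task: str, handler: Handler) -> None:
        # An integration (e.g. a deployment or test service) registers for one task type.
        self.handlers[task] = handler

    def handle(self, event: Event) -> Event:
        task = event.type.split(".")[3]  # "sh.keptn.event.<task>.triggered" -> "<task>"
        result = self.handlers[task](event)
        return Event(f"sh.keptn.event.{task}.finished", result)


@dataclass
class ControlPlane:
    """Orchestrates a task sequence but never executes a task itself."""
    execution_plane: ExecutionPlane
    log: List[str] = field(default_factory=list)

    def run_sequence(self, tasks: List[str], context: dict) -> dict:
        for task in tasks:
            triggered = Event(f"sh.keptn.event.{task}.triggered", dict(context))
            self.log.append(triggered.type)
            finished = self.execution_plane.handle(triggered)
            self.log.append(finished.type)
            context.update(finished.data)
            if finished.data.get("result") == "fail":
                break  # a failed task ends the sequence
        return context


# Usage: two hypothetical integrations plugged into a two-task sequence.
plane = ExecutionPlane()
plane.subscribe("deployment", lambda e: {"result": "pass", "deployed": True})
plane.subscribe("test", lambda e: {"result": "pass", "tested": e.data["deployed"]})
control = ControlPlane(plane)
final = control.run_sequence(["deployment", "test"], {"service": "storefront"})
```

In a real installation the two planes communicate asynchronously across clusters, so this sketch ignores retries, timeouts, and the intermediate `.started` events.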

NTT DATA set up a separate Kubernetes cluster for the Keptn control plane so that it is independent of the environment clusters.

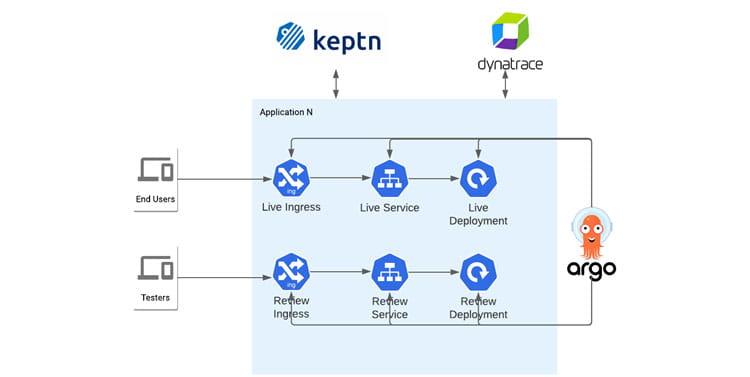

Keptn has many third-party integrations that enrich its continuous delivery use case or enable automated operations. These integration services are installed on the execution plane and can easily be plugged into a task sequence to extend the delivery pipeline. The diagram above shows the services used in this solution: JMeter, Helm, the Nginx Ingress Controller, and the Argo Rollouts controller.

Monitoring

We tested both Prometheus and Dynatrace for monitoring and chose Dynatrace because of its stronger user experience and its out-of-the-box support for multi-cluster setups.

Quality Gates

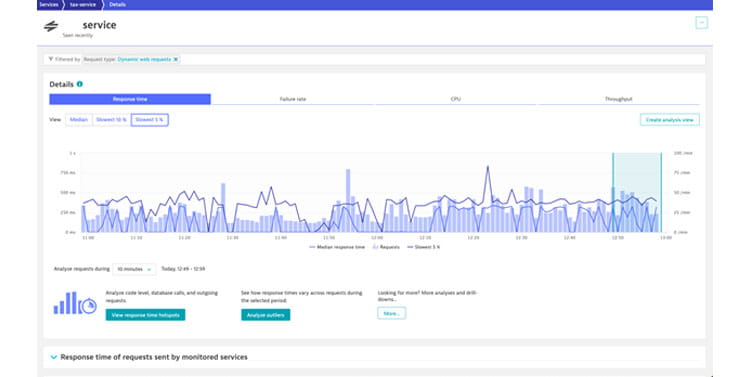

A quality gate answers one question: Does my service meet all defined quality criteria?

Keptn quality gates provide a declarative way to define the quality criteria of your service. Keptn collects, evaluates, and scores those criteria to decide whether a new version may be promoted to the next stage or should be held back.

Keptn quality gates are based on the concepts of Service Level Indicators (SLIs) and Service Level Objectives (SLOs), which makes it possible to describe the desired quality objectives for your applications and services declaratively.

A Service Level Indicator (SLI) is a “carefully defined quantitative measure of some aspect of the level of service that is provided” (as defined in the Site Reliability Engineering book). An example of an SLI is response time (also known as request latency), which indicates how long it takes to return a response to a request. A Service Level Objective (SLO), in turn, is “a target value or range of values for a service level that is measured by an SLI” (also from the Site Reliability Engineering book). For example, an SLO can define that a specific request must return results within 100 milliseconds. A Keptn quality gate can comprise several SLOs, all of which are evaluated and scored; each SLO can be assigned a different weight to reflect its relative importance.
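The scoring idea can be sketched in Python. This is an illustration, not Keptn's evaluation engine: it computes one SLI (95th-percentile response time, nearest-rank method) from raw samples, then scores weighted SLOs. Several details are assumptions: an objective that meets its pass criterion earns its full weight, one that only meets its warning criterion earns half its weight (our reading of how Keptn treats warnings), and the 90% / 75% total-score thresholds, SLI names, and measurements are example values.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


def p95(samples_ms: List[float]) -> float:
    """SLI: 95th-percentile response time using the nearest-rank method."""
    ordered = sorted(samples_ms)
    rank = -(-95 * len(ordered) // 100)  # integer ceil(0.95 * n), avoids float rounding
    return ordered[rank - 1]


@dataclass
class Objective:
    sli: str
    pass_below: float               # full weight if value < pass_below
    warning_below: Optional[float]  # half weight if value < warning_below (assumed rule)
    weight: int = 1


def evaluate(objectives: List[Objective], sli_values: Dict[str, float],
             pass_pct: float = 90.0, warning_pct: float = 75.0) -> Tuple[float, str]:
    """Return the weighted score in percent and an overall pass/warning/fail result."""
    achieved = 0.0
    total = 0
    for obj in objectives:
        value = sli_values[obj.sli]
        total += obj.weight
        if value < obj.pass_below:
            achieved += obj.weight
        elif obj.warning_below is not None and value < obj.warning_below:
            achieved += obj.weight / 2
    score = 100.0 * achieved / total
    if score >= pass_pct:
        return score, "pass"
    if score >= warning_pct:
        return score, "warning"
    return score, "fail"


# Usage with made-up measurements: 20 latency samples of 5, 10, ..., 100 ms.
latencies = [float(ms) for ms in range(5, 105, 5)]
objectives = [
    Objective("response_time_p95", pass_below=100.0, warning_below=150.0, weight=2),
    Objective("error_rate_pct", pass_below=1.0, warning_below=2.0, weight=1),
]
score, result = evaluate(objectives, {"response_time_p95": p95(latencies),
                                      "error_rate_pct": 1.5})
```

With these numbers the latency SLO passes (95 ms is under the 100 ms target) while the error-rate SLO only reaches its warning band, so the gate scores about 83% and returns a warning rather than a pass. Weighting latency twice as heavily means a latency regression alone would drop the score to about 33%.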

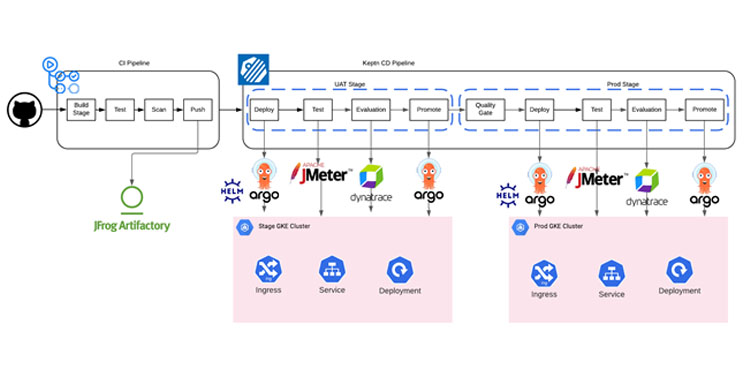

CI/CD Pipeline

For Volusion, the CI pipeline runs on GitHub Actions, which builds the application Docker images and pushes them to JFrog Artifactory. The CI pipeline is triggered by new commits that developers push to GitHub repositories. If the CI pipeline succeeds and a new Docker image is pushed to Artifactory, the CD pipeline is triggered. Keptn orchestrates the CD process, including the Deploy, Test, Evaluation, and Promotion phases. Between environments, quality gates validate that the defined quality criteria are met before a new release can be promoted to the next environment. A quality gate may also require manual approval, for example after User Acceptance Testing or from an authorized individual.
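The promotion logic between environments can be sketched as a loop over stages. The stage names, image tag, and callback signatures are hypothetical; the Deploy and Test phases are left out; and routing a `warning` result to a human approver is an assumption made for illustration, not a description of Volusion's exact configuration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    manual_approval: bool = False  # e.g. a UAT sign-off before leaving this stage


def run_delivery(image: str, stages: List[Stage],
                 evaluate: Callable[[str, str], str],
                 approve: Callable[[str, str], bool]) -> List[str]:
    """Walk one image through the stages and return the stages it reached."""
    reached: List[str] = []
    for stage in stages:
        # Deploy and Test phases are elided: assume they already ran for this stage.
        reached.append(stage.name)
        result = evaluate(image, stage.name)  # quality gate: "pass", "warning" or "fail"
        if result == "fail":
            break  # hold the release back in this stage
        needs_human = stage.manual_approval or result == "warning"
        if needs_human and not approve(image, stage.name):
            break  # promotion requires a human decision and it was declined
    return reached


# Usage with hypothetical stage names and image tag.
stages = [Stage("dev"), Stage("staging", manual_approval=True), Stage("production")]
image = "artifactory.example.com/storefront:1.4.0"
all_pass = run_delivery(image, stages, lambda img, s: "pass", lambda img, s: True)
no_signoff = run_delivery(image, stages, lambda img, s: "pass", lambda img, s: False)
```

Passing `evaluate` and `approve` in as callbacks keeps the promotion rule fixed while the tools that produce the evaluation result and the approval decision can change.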

Blue-Green Deployment

Keptn supports canary and blue-green deployments by using Argo Rollouts, a Kubernetes controller and set of CRDs that bring advanced deployment capabilities such as blue-green, canary, canary analysis, experimentation, and progressive delivery to Kubernetes.

A blue-green deployment runs both the new and old versions of the application at the same time. During this period, only the old version receives production traffic, which allows developers to run tests against the new version before switching live traffic to it.
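A blue-green switch boils down to two pointers: one to the version serving live traffic and one to the version under test. The toy router below models that in Python. Argo Rollouts expresses the same split through the `activeService` and `previewService` fields of its blue-green strategy, but the class and method names here are hypothetical and the sketch does no real networking.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class BlueGreenRouter:
    active: str                    # version receiving production traffic
    preview: Optional[str] = None  # new version, reachable only by test traffic

    def route(self, request_is_test: bool = False) -> str:
        # Live requests always go to the active version.
        if request_is_test and self.preview is not None:
            return self.preview
        return self.active

    def deploy_preview(self, version: str) -> None:
        # Both versions now run side by side; live traffic is unchanged.
        self.preview = version

    def promote(self, tests_pass: Callable[[str], bool]) -> bool:
        # Switch live traffic only if the preview version passed its tests.
        if self.preview is None or not tests_pass(self.preview):
            return False
        self.active, self.preview = self.preview, None
        return True


# Usage with hypothetical version labels.
router = BlueGreenRouter(active="v1")
router.deploy_preview("v2")
live = router.route()
smoke = router.route(request_is_test=True)
```

Because the switch is a single pointer swap, promotion is all-or-nothing, which is the main difference from a canary rollout that shifts traffic gradually.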

Argo Rollouts has very flexible networking options and supports the following service mesh and ingress solutions:

- AWS ALB Ingress Controller

- Ambassador Edge Stack

- Istio

- Nginx Ingress Controller

- Service Mesh Interface (SMI)

We chose the Nginx Ingress Controller because our engineering team was most familiar with it and it met all of our requirements.

To learn more about this project, read the case study and watch the replay of a presentation from the November Keptn User Group Meeting.
